About

Notes on a family of related software systems, a particular software architecture, developed by Douglas Hofstadter and his team, the Fluid Analogies Research Group (FARG), for the purpose of capturing and studying certain aspects of human cognition.

This architecture — which, I propose, should bear the name of its principal investigator — was the result of a sustained attempt to replicate or simulate some of the mechanisms by which we humans do our thinking. Hofstadter's principal interest has always been analogies and their role in cognitive processes. With these systems, Hofstadter wanted to explore conceptual fluidity, the way in which we make certain "leaps" in our thinking based on situational similarity.

Hofstadter, together with his students, focused on computationally lightweight microdomains that were easy to control, but were still compelling enough to invoke complex cognitive function in humans. Starting with the (bio)logically inspired ideas of analogy-making and emergent, recurrent cognitive processes that Hofstadter explored in his remarkable book GEB, the "FARGonauts" went on to experiment with algorithmic cognition in domains ranging from seemingly simple number and letter substitutions all the way to creative rule-making and complex pattern matching.

This piece explores some of this territory, presenting the main features and functions of the Hofstadterian cognitive system architecture. Most of the content here is adapted from Hofstadter's research retrospective book, Fluid Concepts and Creative Analogies (1995), particularly the discussion on the Copycat program.

Be sure to check out Alex Linhares' FARGonautica research repository for more details on the group's output, including some software sources.

Introduction

If you've ever wondered whether anybody ever took the ideas from GEB and tried to build software systems out of them, then I've got just the book for you. "Fluid Concepts and Creative Analogies" (FCCA; Basic Books, 1995) is a collection of papers from the members of the Fluid Analogies Research Group (FARG), complete with chapter-by-chapter commentary from the team lead, Professor Douglas Hofstadter.

Subtitled "Computer Models of the Fundamental Mechanisms of Thought", this compilation book summarises research done primarily at the Fargonaut base at Indiana University between the late 1970s and the early 1990s. FCCA covers system studies and other writing in roughly chronological order, sharing the origin story and development of a "cognitive" software architecture. The book tells the story of a family of software systems designed and built for the study of analogies, "fluid concepts" — computer models of mental processes, or what pass for intelligent behaviour in minds and machines.

On the surface, Hofstadterian architecture is little more than a convoluted metaheuristic algorithm for finding rules for little creative puzzles. And yet, there is method in it. In GEB, Hofstadter argued that strange looping structures and complex emergent phenomena lie at the heart of one of the greatest puzzles of them all, the secret of consciousness. Hofstadter resolved to study these with his research group. The FARG systems are a testament to an ambitious research programme that attempted to construct cognition from the ground up.

Hofstadter and his team focused on microdomains, highly manageable virtual research environments, that try to capture or expose the essence of specific cognitive phenomena. This orientation towards idealised, lightweight domains and principled methods over impressive "real world" results led the FARG effort away from mainstream AI. Hofstadter and his students explored unfashionable evolutionary computing ideas at a time when the community was centred around hand-tuned expert systems and formalist old school AI.

There was little demand for original research of this kind, research without flashy benchmarks to point at and no revolutionary applications to immediately enable. Hofstadter was interested in studying the subtleties of human cognition: the FARG systems were certainly not your grandfather's AI. On the other hand, FARG output failed to rise above the deluge of bio-inspired handwaving (Sörensen, 2012) which has long marred the neighbouring discipline of operations research. In any case, Hofstadter saw the writing on the wall, made a clean break from a community he didn't feel connected to, and has never found his way back to mainstream computing circles.

Is there a bright idea here, or is it all just a convoluted metaheuristic soup? Are these methods any good in practice? What are the problems? Are there better ways to produce results of this kind?

By modern standards, these '80s computing research efforts have a distinctly quaint feel to them, but at the same time the FARG approach is still novel and fresh in a sense, perhaps even unexplored. Maybe it's all just convoluted metaheuristics play, maybe there's a valid intent behind it. In any case, if you believe that neural networks, linear algebra, and symbolic logic are all there is to AI, this stuff might give you some pause.

I believe that there is something here, something that could be worth another look now, some decades later. For one, the focus on microdomains for general cognitive tasks is certainly still worthwhile: the mechanisms and peculiar facets of human cognition are still likely best studied in as much isolation as possible. Who knows what can be achieved by exploring those worlds a little more with modern hardware and techniques?

Our objective here is to get a feel for the key ideas behind FARG research in cognitive science. We are interested in how the group explored what they call the "fluidlike properties of thought". We want to gain an appreciation for the systems they developed, through an understanding of their composition. Even if particular components evolved over time, all FARG systems share the same driving force and foundation — the Hofstadterian architecture.

Hofstadterian Architecture

There is no standard umbrella term that ties together all FARG software systems, though they clearly share a common ancestry. Professor Melanie Mitchell, a former student of Hofstadter's, uses the term 'active symbol architecture' to describe her Copycat. Others use the term 'FARGitecture', to emphasise the collaborations and the team effort that gave rise to these systems. In FCCA, Hofstadter makes use of the more technically oriented term "Parallel terraced scan". All of these mean more or less the same thing. Indeed, it is rather peculiar, even concerning, that no name has stuck.

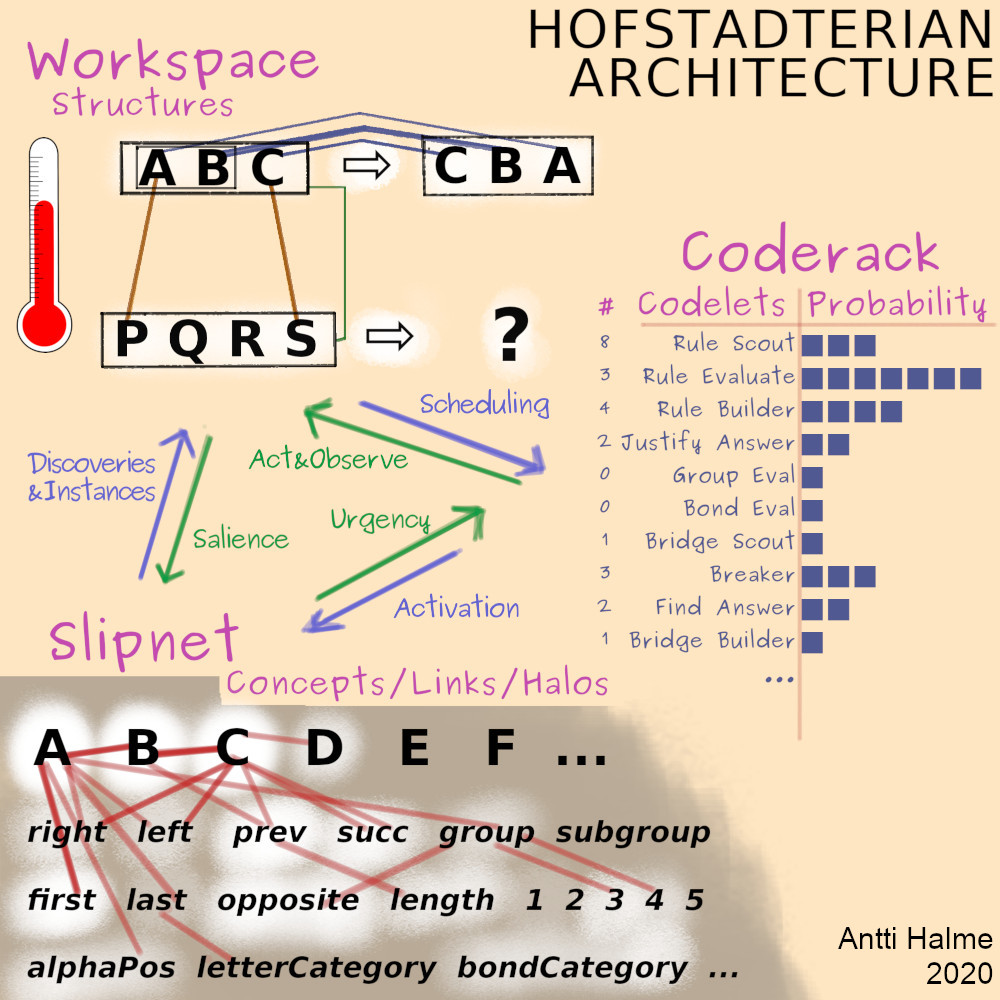

In my view all FARG systems — some presented in FCCA, some developed much later — are variations of the same idea in different guises. This approach to system design is what I call Hofstadterian architecture. It is made up of a set of distinct components that work closely together to form a web of complex feedback loops and operational pressures that guide program execution in highly focused microdomains.

Overview

In essence, Hofstadterian architecture is a certain kind of metaheuristic multi-agent search technique that uses randomness and parallel (or strictly speaking concurrent) processing to model and simulate what seems to happen when humans perform certain highly focused and specific cognitive tasks. I'll unpack this formulation a little.

Many computational tasks can be modelled as search problems. Search algorithms either retrieve data stored in a data structure or simply calculate the corresponding entries in the search space of the problem domain. Search is about finding a match to a particular query.

For example, organising the seating arrangement at a wedding can be thought of as a search problem. Planning a working layout is an exercise in finding a set of seat designations that meet certain soft adjacency rules, table size constraints, individual preferences, and so on. Different seat arrangements can be viewed in terms of instances of a data structure or as the corners of a search space, and so the task is to find, by some process, a configuration that is in some particular sense as good as possible.

Heuristic search is an enhanced form of search, where the idea is to exploit knowledge about the problem domain and to focus search efforts in a fruitful direction or area. Without heuristics, the best one can do is be systematic and go through every option, every combination. Heuristic search is informed search, where the algorithm can prioritise different possible options to evaluate. Some particularly insightful heuristics even allow the process to completely discard certain areas of the search space.

Coming back to the example, in Western culture seating arrangements where the bride and groom are not seated close to one another are unlikely to meet the objectives that determine which arrangements are preferable over others. Because of their obvious unsuitability, they need not even be considered.
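To make the difference between exhaustive and heuristic search concrete, here is a minimal sketch of the wedding example. Everything in it is an assumption made for illustration: the guest list, the single row of seats, and the toy `score` function are not from FCCA. Note also that the filter below still enumerates every permutation before discarding it; a serious implementation would avoid generating the hopeless arrangements in the first place.

```python
from itertools import permutations

# Hypothetical guest list, seated in a single row for simplicity.
GUESTS = ["bride", "groom", "aunt", "uncle", "friend"]

def adjacent(arrangement, a, b):
    """True if a and b sit next to each other in the row."""
    return abs(arrangement.index(a) - arrangement.index(b)) == 1

def score(arrangement):
    """Toy objective (assumption): reward the aunt and uncle sitting together."""
    return 1 if adjacent(arrangement, "aunt", "uncle") else 0

def exhaustive(guests):
    """Uninformed search: every possible arrangement is a candidate."""
    return list(permutations(guests))

def pruned(guests):
    """Heuristic search: discard arrangements that separate the couple."""
    return [p for p in permutations(guests) if adjacent(p, "bride", "groom")]

all_options = exhaustive(GUESTS)
candidates = pruned(GUESTS)
best = max(candidates, key=score)
print(len(all_options), len(candidates))  # 120 48
```

With five guests the heuristic removes more than half of the search space before any scoring takes place, and the saving grows quickly with the size of the guest list.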

Metaheuristics, then, is simply heuristics about heuristics, a higher level procedure by which the primary search heuristics are found or selected or parameterised: search in the space of heuristics. In the wedding example, fairness or balance could be a metaheuristic, where having equally good seating for the guests of the bride and the guests of the groom can guide the selection of which primary heuristics to rely on for finding the arrangement proper. Similarly, vocal interference from mothers-in-law can result in certain arrangement heuristics being preferred over others in the final scoring: loud noises do not help with the search, but have an effect on how the search is carried out.

Parallel processing is about sharing computational work with multiple processors to shorten the wall clock time that it takes to finish the job as a whole. Crucially, this adds no new capability over a single processor. The result of the calculation is always the same. I make a distinction between parallel and concurrent processing, because I want to emphasise the co-existence of multiple processors and the communication between them.

If co-executing processors are completely isolated from one another and simply do a share of the work, the parallel execution always works out the same way. However, if there's any communication between the processors, direct or otherwise, then the only way to guarantee repeatable behaviour is to control the communication and all this distributed processing extremely carefully. If there is no control or control is not carried out carefully enough, the processors may interfere with one another and the behaviour of the overall system may prove unpredictable.

(To be clear, my formulation of concurrency is not in disagreement with the fundamentals of computing and computability. Concurrency doesn't add any new capability per se: I'm merely saying that a faithful simulation of an uncontrolled concurrent process would require encoding more of the processors' shared world state and environment than is specified in the original problem formulation. I'm interested in computation in the real world, so I'm willing to use a shorthand to claim that concurrent processing does something more than mere parallelism.)

Multi-agent systems can be thought of as an attempt to leverage this concurrent interference and the broader work sharing context by explicitly modelling the processors as entities, or agents, with their individually executing and evolving programs. Typically all agents run the same program, and diverge only in terms of their share of the workload and their position and role in relation to other agents. In the Hofstadterian model, however, the agents run mostly different snippets of code, as we'll see. Some multi-agent systems feature agents with full life-cycles that come and go, while others are so tightly structured that they effectively function like vanilla parallel executions.

In the running example, the seating arrangement can be done entirely in parallel by dividing the guests in groups of ten, and giving each group to a different planner to lay out over the tables they oversee. Concurrency could manifest through a conversation between the planners, or simply by having everyone work on a shared set of tables at the same time. One planner might not be able to place a guest in a certain seat, because another planner beat them to it, but further discussion and negotiation might change things.

Finally, the notion of randomness is built into the process. I would again adopt a slightly non-standard distinction between explicit randomness, where some random value generating procedure (backed by true noise or not) is invoked to help with decisions, and implicit randomness, which works in the temporal domain and is caused by non-ideal processes carrying out the concurrent execution. One can get randomness in an application through either one. In my view Hofstadterian architecture has features of both explicit and implicit randomness.

Randomness can also feature in the problem itself, if the task is to plan under uncertainty. Some guests might not be able to make it to the wedding after all.

Microdomains

Before diving into the internals of Hofstadterian architecture, a word on the context in which Fargonauts employed their systems.

All the way through FCCA, Hofstadter argues in favour of making use of microdomains in cognitive science research. This is both a statement of his own vision for a model of human cognition as well as a reaction to the prevailing AI orthodoxy of the era. Chapter 4 of FCCA in particular is a forceful attack on the AI methodology of the day and what the authors — David Chalmers, Robert French, and Hofstadter — saw as a strictly inferior and absurdly biased model of cognition that bypasses the actual problem.

In Hofstadter's world, the secret to cognition is analogy-making. The manner in which we make connections between concepts and things, both the obvious kind and the more outlandish ones, shapes our thinking. Analogies are links between representations of concepts and categories, which themselves expand as part of the linking process. In the world of cognition and AI, this line of thinking quickly leads to questions about the nature and structure of these representations. For Hofstadter, however, the real problem of cognition is a slightly different one, that of perception.

Hofstadter and his students argue in their writing that the AI community is wrongly obsessed with the question of structure. The symbolist tribe, as exemplified by programs such as the early Logic Theorist (Newell & Simon, 1956) and the General Problem Solver (Simon, Shaw, & Newell, 1959) as well as BACON (Langley et al., 1987) and the Structure-Mapping Engine (Falkenhainer, Forbus, & Gentner, 1990), is effectively delusional. The authors of these programs make grand, misleading claims about their systems' powers. These programs are supposed to faithfully represent complex phenomena in the real world, and by sheer symbolic reference manipulation be able to establish theories and analogies, find analogical mappings and even derive conclusions.

The problem is that the symbolists frequently bypass the messy problem of perception by feeding into these programs precisely the data they require and in precisely the right manner of organisation. In other words, the symbolists ignore the high-level problem of perception entirely. The argument goes that one might eventually be able to simply plug in a "perception module" and, voilà, we have cognition. The symbolists use hand-coded representations "only for the time being".

The Fargonauts take a completely different stance. In their thinking cognition is analogy-making, and analogy-making is built on top of high-level perception: cognition is infused with perception. High-level perception requires solving the problems of relevance and organisation simultaneously. Of all the data available in the environment, what gets used in various parts of representational structures? How is data turned into these representations? In other words, the main high-level problem worth solving is precisely the one the symbolists ignore.

This brings us to microdomains. Hofstadter and his students argue that high-level perception is built on low-level perception, but the latter is currently (1992) so challenging that, for the time being, modelling the real world must remain a distant goal. If one restricts the domain to something far simpler than the real world, some understanding might be within reach. In a microdomain it might be possible to explore low-level perception that is comprehensive enough to give a firm foundation to the higher mechanisms of analogy-making.

Microdomains are superficially less impressive than "real world" domains, but through their idealised nature they may yield valuable insights. All FARG systems explore this frontier between perception and analogy in search of patterns of interaction that could capture some aspects of the fluidity of human thought.

"Concepts without percepts are empty; percepts without concepts are blind."

Principles

In the conclusion of Chapter 4 in FCCA, Hofstadter argues that high-level perceptual processes lie at the heart of human cognitive abilities. Cognition cannot succeed without processes that build up the appropriate representations. Before studying the components in detail, we need to consider the principles on which FARG systems were built.

To make sense of the principles, we need to first establish some FARG terminology:

- Perception: The process of making sense of the environment; finding the signal in the noise, the order in chaos.
- Representation: "The fruits of perception", raw data turned into useful structures by a process of filtering and organisation.
- Concept: Notionally the Platonic ideal, the essence of a thing as informed by available representations; in FARG systems something to the tune of a pointlike node embedded in a graph or network of its peers, with a halo of connectivity around it.
- Analogy-making: The process of making new connections between concepts; concept expansion.
- Cognition: Making use of the concept graph through perception from the bottom up and by analogy-making from the top down.

Principles of Hofstadterian architecture:

- Inseparability of perception and high-level cognition: Focusing on perception will help us make progress on the puzzle of cognition.
- Gradual build-up of representations: Representations are generated and developed through a sequence of small, incremental changes powered by observations of the environment.
- Easy reconfiguration of representations: Cognitive representations operate at multiple levels simultaneously and are held together by various kinds of links. High-level perception can be thought of as the process of maintaining and upgrading these structures.
- Influence: Concepts have influence over other concepts, as does the broader context. This top-down control complements the bottom-up generative process.
- Sub-cognitive pressure: Concepts and representations have varying degrees of relevance, or importance, with respect to any particular cognitive task. Concepts are in constant competition with one another, and certain concepts end up exerting greater influence than others. This is a stochastic process.
- A blend of pressures: There are both context-dependent and context-independent pressures at play, and their co-existence facilitates simultaneous bottom-up and top-down processing.
- Integration: Perception and concept processing and analogy-making are all functionally integrated and intertwined, rather than separate modules in a linear pipeline.
- Concurrent execution: There are many ways in which the overall search or execution can proceed, and instead of systematically scanning all of them, there is some value in their concurrent exploration. Many search "heads" are in operation at the same time, and the randomness (both explicit and implicit) baked into the system helps the search branch out. The search agents distinguish themselves and may exhibit varying rates and modes of operation as the system evolves.
- The centrality of analogy-making and variations on a theme: High-level cognition is analogy-making.
- Varying degrees of sensitivity to pressure in representations: Some representations are deep and immune to context, others are shallow and capable of changing shape under pressure.
- Transience and versatility of representations: Representations can take many shapes and influence concepts in many ways. Representations come and go, concepts tend to stick around.
- The crucial role of the inner structure of concepts: "Conceptual neighbourhoods", the distance and overlap between concepts, and conceptual depth all contribute to the overall task: concepts have dimensionality.

Example: Copycat

The essential mechanisms of a Hofstadterian architecture are perhaps best exemplified by the Copycat system, a computer program designed to be able to discover "insightful analogies" in a domain made up of alphabetical letter-strings. Copycat formed the core of Melanie Mitchell's PhD thesis (Mitchell, 1993), an attempt to model analogy-making in software, but in a "psychologically realistic way".

Copycat works with problems like these:

If I change the letter-string abc to abd, how would you change ijk in "the same way"?

If I change the letter-string aabc to aabd, how would you change ijkk in "the same way"?

The challenge of the task lies with the term "same". For the first case, most humans prefer the answer ijl, based on the perceived rule "replace the rightmost letter by alphabetic successor". Other options include ijd, insisting on a rigid rule replacement-by-d, or even ijk, the null-operation following rule "replace every c with d". In the extreme, answering abd would follow from the rule "replace by abd regardless of input". The point is that out of many valid answers, we have certain preferences.

The second problem should highlight the subtlety and the aesthetic dimension of the task. Rigidly applying the leading rule from the first case would yield ijkl, but it appears rather crude compared to ijll, which evolves the rule into "replace the rightmost group by its alphabetic successor".

Moving from "rightmost letter" to "rightmost group" is an example of the kind of fluidity that is the hallmark of human cognition. The concept letter "slipped" into group under the pressure of the task.

The point here is not what the "right" answer is: for the second problem one could make a case for jjkk, hjkk, and so on. The point is that "conceptual slippage" is how we think about these problems, and thanks to the accessibility of this microdomain, that is something a software system might be able to learn to do as well.
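The competing rules discussed above are easy to write down as plain string functions, which makes it clear that each of them really does turn abc into abd and yet answers the follow-up questions differently. This is only a sketch of the rules themselves, with function names of my own invention; it says nothing about how Copycat discovers or ranks them.

```python
def successor(ch):
    """Next letter of the alphabet; 'z' has no successor in this sketch."""
    if ch == "z":
        raise ValueError("z has no successor")
    return chr(ord(ch) + 1)

def rightmost_letter_successor(s):
    """Replace the rightmost letter by its alphabetic successor."""
    return s[:-1] + successor(s[-1])

def rightmost_letter_to_d(s):
    """Replace the rightmost letter by d, whatever it is."""
    return s[:-1] + "d"

def every_c_to_d(s):
    """Replace every c with d."""
    return s.replace("c", "d")

def always_abd(s):
    """Replace by abd regardless of input."""
    return "abd"

def rightmost_group_successor(s):
    """Replace the trailing run of identical letters by its successor."""
    last = s[-1]
    stem = s.rstrip(last)
    return stem + successor(last) * (len(s) - len(stem))

RULES = [rightmost_letter_successor, rightmost_letter_to_d,
         every_c_to_d, always_abd, rightmost_group_successor]

# Every rule is consistent with the example abc -> abd ...
assert all(rule("abc") == "abd" for rule in RULES)

# ... but they disagree on what "the same way" means elsewhere.
for rule in RULES:
    print(rule.__name__, rule("ijk"), rule("ijkk"))
```

The letter rule and the group rule agree on ijk and part ways only on ijkk, which is exactly where the slippage from letter to group becomes visible.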

Hofstadterian architecture — the FARG system family — is an exploration of fluid concepts and this mechanism by which novel concept structures get constructed from observation. For Hofstadter and the Fargonauts this "conceptual slippage" is the crux of analogy-making, the fuel and fire of cognition.

Components

"The essence of perception is the awakening from dormancy of a relatively small number of prior concepts — precisely the relevant ones."

Entities

As Hofstadter and his students were interested in studying conceptual slippage, the concepts that made up the microdomains were, by themselves, not that important. It was enough to have some relevant concepts, and enough structure in them to allow for gradual changes. In other words, no FARG project attempted to derive concepts from data or from some virtual environment: all relevant concepts were given to the programs beforehand. While this is hardly better than what the symbolists were up to, at least here the researchers are not claiming to be coming up with new concepts!

One can imagine systems that try to derive their entities from the data they work with, but in terms of being of interest to humans, systems of this kind need to have some grounding in what humans find interesting. Some might say this is rigging the game too much, but on the other hand without a shared vocabulary, we would struggle to understand or appreciate what the systems were doing. We would like to understand what the system is doing, so we teach it our concepts.

For the Copycat letters domain, the concepts were built around the notion of a word as a sequence of letters. This yields concepts such as: the ideal letters of the alphabet, letter, successor and predecessor, same and opposite, left/right neighbour, leftmost/rightmost, group of adjacent letters, starting point, and so on. These are chiefly local properties, as opposed to things like the fact that letter t is eleven letters behind i, as humans tend to not find such things interesting. The special status of letters a and z is included, as are some small integers to facilitate basic counting. In the Copycat system, there are a total of just 60 concepts or so.

Slipnet

The Slipnet is the home of all permanent Platonic concepts. In a complete FARG system, it functions as the long-term memory unit, the repository of all categories. Specifically, the Slipnet contains object types, not instances of these types. Furthermore, the Slipnet is not merely a storage system, but a dynamic structure that evolves over time as the computation proceeds.

Structurally, the Slipnet is a network of interrelated concepts, with each individual concept being represented not simply as a node in the network, but more as a diffuse object centred on a node. Instead of a set of pointlike entities, concepts in the Slipnet are to be taken more as a collection of overlapping "halos", or clouds. This is similar to a probabilistic mixture model setup: the way in which the concept halos overlap is at least as important as where the concepts are "anchored".

Concepts in the Slipnet are connected by links, numerical measures of "conceptual distance", which can change over the course of a run. It is these distances that determine, at any given moment, what conceptual slippages are likely and unlikely. The shorter the distance, the more easily pressures resulting from the processing can induce slippage between two concepts.

The Slipnet plays an active role in every execution, reflecting the way in which concepts interact and contribute to the overall heuristic search effort. Nodes gain and lose score on a continuous relevance metric called activation, and in the process spread some of this activation towards their neighbours. In aggregate, the activations form a time-varying function that represents the situational understanding of the program as it executes. This situational picture then drives changes in the links between concepts, and, ultimately, the conceptual slippage mechanism.

Activation flow is beyond the scope of this exposition, but the essence of it is that nodes spread activation based on distance to neighbouring concepts. Nodes also have a characteristic decay rate which results in differences in the influence of individual concepts. The links themselves come in many shapes, and are in fact labelled by concepts in their own right. The links pull and push concepts closer and further apart, but don't change the fundamental topology of the Slipnet. No new structures are ever built and no old structures destroyed: the concepts are fixed in that sense.
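Although the details are out of scope, the general shape of the mechanism can be sketched in a few lines. The following is a toy model under my own assumptions, not Copycat's actual update rule: the `decay` values, the linear `1 - distance` transfer and the node names are all hypothetical. What it does preserve is the structure described above: a fixed topology, activation that leaks away at a node-specific rate, and spreading that favours nearby concepts.

```python
from dataclasses import dataclass, field

@dataclass
class Node:
    name: str
    decay: float             # fraction of activation lost per step (hypothetical)
    activation: float = 0.0  # relevance score, kept in [0, 1]

@dataclass
class Slipnet:
    nodes: dict = field(default_factory=dict)
    links: dict = field(default_factory=dict)  # (source, target) -> distance in [0, 1]

    def add_node(self, name, decay, activation=0.0):
        self.nodes[name] = Node(name, decay, activation)

    def add_link(self, source, target, distance):
        self.links[(source, target)] = distance

    def step(self):
        """One update: each node keeps part of its activation and adds what its neighbours send."""
        incoming = {name: 0.0 for name in self.nodes}
        for (source, target), distance in self.links.items():
            # Nearby concepts (small distance) pass on more activation.
            incoming[target] += self.nodes[source].activation * (1.0 - distance)
        for name, node in self.nodes.items():
            kept = node.activation * (1.0 - node.decay)
            node.activation = min(1.0, kept + incoming[name])

net = Slipnet()
net.add_node("successor", decay=0.5, activation=1.0)
net.add_node("predecessor", decay=0.1)
net.add_node("letter-a", decay=0.1)
net.add_link("successor", "predecessor", distance=0.2)
net.add_link("successor", "letter-a", distance=0.8)
net.step()
for node in net.nodes.values():
    print(node.name, round(node.activation, 2))
```

After a single step the close neighbour has picked up far more activation than the distant one, while the source node has lost half of its own. Note that `step` never adds or removes nodes or links, in keeping with the fixed topology.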

In our running example, the concept opposite can provide the label for a link between right and left. As the opposite concept gains activation, it will pull right and left closer to one another, rendering the potential slippages they represent more probable. Recall that nodes are more cloudlike than pointlike: the probability is fully captured in the overlap of the concept clouds, the aggregate density.
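The relationship between a label's activation, the length of the link it labels, and the likelihood of slippage can be illustrated with two small functions. Both formulas are assumptions chosen for simplicity (a linear shrink capped by a hypothetical `max_shrink` parameter, and a probability of one minus the distance); they are not taken from the FARG sources.

```python
def effective_distance(base_distance, label_activation, max_shrink=0.6):
    """Shrink a link as the concept labelling it becomes more active.

    base_distance and label_activation are assumed to lie in [0, 1];
    max_shrink is a hypothetical cap on how far the link can contract.
    """
    return base_distance * (1.0 - max_shrink * label_activation)

def slippage_probability(distance):
    """Closer concepts slip more easily (toy linear relationship)."""
    return 1.0 - distance

# A link between right and left, with its label dormant and then fully active.
dormant = effective_distance(0.8, label_activation=0.0)
active = effective_distance(0.8, label_activation=1.0)
print(round(slippage_probability(dormant), 2), round(slippage_probability(active), 2))
```

The base distance never changes; only the effective distance does, which is one way of reading the claim that the links stretch and contract without altering the topology of the network.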

From this point of view, the Slipnet would appear to have soft edges, but at the same time the existence of a discrete conceptual core is crucial for slippage, the Fargonauts argue. Fully diffuse regions would not permit discrete jumps, as there would be no meaningful starting and ending point. The cloud must have a centre.

"The centre is not the centre."

Each node in the Slipnet has a static feature known as conceptual depth, a measure of abstractness. For the Copycat case, for example, the concept opposite is deeper than the concept successor, which itself is deeper than the concept of the letter a. Loosely speaking, depth determines how likely concepts are to be directly observed. If a deep concept is heavily involved in a situation, the interpretation is that it is likely contributing to what humans would consider to be the essence of a situation. In other words, aspects of deep concepts, once observed, have greater influence.

Like the concepts themselves, depth is assigned a priori by the researchers and considered innate. Again, the purpose of FARG systems is not to determine depth information from data (about the environment), but to study slippage behaviour. Depth does not imply relevance, of course, but Hofstadter and his students found that the additional leverage provided by depth helped systems zero in on rules humans found natural. Additionally, deeper concepts are more resistant to slippage. In other words, conceptual depth is, in a sense, a human bias term.

The sum total of all these dynamics is that the Slipnet is constantly changing "shape" to better fit the situation at hand. Conceptual proximity is context-dependent, and varies over time. The FARG argument goes that through all this flux, the concepts, as reified in the Slipnet, are more emergent in nature rather than explicitly defined.

"Insightful analogies tend to link situations that share a deep essence, allowing shallower concepts to slip if necessary. Deep stuff doesn't slip in good analogies."

Workspace

The Workspace is the short-term memory component in Hofstadterian architecture. If the Slipnet represents the space of concepts, the Workspace is where those concepts are put to work in the form of instances. The Workspace is the "locus of perceptual activity", the construction site in which dynamic structures of many sizes are simultaneously being worked on. This is similar to the notion of a "blackboard" in some distributed systems.

In the case of Copycat, the Workspace is filled with temporary perceptual structures such as raw letters, descriptions, bonds and links, letter groups and bridges. The Workspace is a busy, deeply concurrent building environment, not unlike the cytoplasm of a biological cell.

In the beginning, there's only disconnected raw data in the Workspace, the initial configuration that specifies the situation at hand. In the case of Copycat, this would be each input letter individually and basic details of order. The idea is that these initial seeds grow and link together to form perceptual structures and gain descriptions based on applicable Slipnet concepts.

Objects in the Workspace evolve under various pressures as the program executes. Not all objects receive an equal amount of attention. The likelihood of any given object being transformed by a particular operator is given by its salience, a function of the object's importance and its "unhappiness".

Importance increases with the number of associated descriptions a given object has and the level of activation of the corresponding concepts. Unhappiness is a relational measure, determined by the degree of integration a given object has with other objects. Unhappy objects are less connected, and so "cry" for more attention.

In other words, salience is a probability distribution over all the objects in the Workspace; a function of both the previous Workspace state and the state of the Slipnet.
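As a rough illustration, salience-driven attention can be sketched as a weighted random choice. The averaging formula, the example scores and the function names below are my own assumptions; the text only establishes that salience depends on importance and unhappiness and that it governs how likely an object is to be picked.

```python
import random

def salience(importance, unhappiness):
    """Hypothetical combination: a plain average of the two scores."""
    return (importance + unhappiness) / 2.0

def salience_distribution(objects):
    """Normalise raw salience values into a probability distribution.

    objects maps a name to an (importance, unhappiness) pair; at least
    one object is assumed to have non-zero salience.
    """
    raw = {name: salience(imp, unh) for name, (imp, unh) in objects.items()}
    total = sum(raw.values())
    return {name: value / total for name, value in raw.items()}

def pick_object(objects, rng):
    """Choose the next object to work on, in proportion to its salience."""
    dist = salience_distribution(objects)
    names = list(dist)
    return rng.choices(names, weights=[dist[n] for n in names], k=1)[0]

# Made-up Workspace contents: (importance, unhappiness) per object.
workspace = {"a": (0.2, 0.9), "b": (0.2, 0.1), "c": (0.8, 0.3)}
dist = salience_distribution(workspace)
chosen = pick_object(workspace, random.Random(0))
print({name: round(p, 2) for name, p in dist.items()})
```

In this toy Workspace the unimportant but very unhappy object a receives as much attention as the important, fairly content object c, while b is mostly ignored.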

The principal activity in the Workspace is bond-making, the process by which relationships between two objects are discovered and made concrete as inter-object bonds. This is not a uniform process, but rather goes on at different speeds for different kinds of relationships. The purpose of this mechanism is to reflect the way in which human perception is biased towards certain observations over others. In the Copycat domain of letter strings, we notice, for example, repeated characters before successors and predecessors. Every bond, once established, has a dynamically varying strength, a function of concept activation and depth as well as the number of similar bonds nearby.

Object bonds of the same type are candidates for being "chunked" into a higher level kind of object called a group. For example, in Copycat, a and b might be bound together, and separately b and c: these two bond pairs of similar type can give rise to the group abc. Groups, once established, acquire their own descriptions, salience values, bond strengths and so on. Naturally, this grouping process can continue to more levels. This is the foundation of hierarchical perceptual structures, all built "under the guidance of biases emanating from the Slipnet".
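The chunking step can be sketched as follows. This is a deliberately deterministic toy: the real system proposes and builds bonds and groups stochastically, via codelets, and lets them compete. The function names and the three bond types are assumptions made for this sketch.

```python
def bond_type(left: str, right: str):
    """Classify the relation between two adjacent letters, or None if unrelated."""
    diff = ord(right) - ord(left)
    return {0: "sameness", 1: "successor", -1: "predecessor"}.get(diff)


def find_groups(string: str):
    """Chunk maximal runs of same-type bonds into (bond_type, letters) groups."""
    groups = []
    start = 0
    current = None
    for i in range(len(string) - 1):
        kind = bond_type(string[i], string[i + 1])
        if kind != current:
            # The run of bonds that was open so far ends at letter i.
            if current is not None:
                groups.append((current, string[start:i + 1]))
            start, current = i, kind
    if current is not None:
        groups.append((current, string[start:]))
    return groups
```

For abc this yields a single successor group. For aabc it yields a sameness group aa and a successor group abc that share a letter, which is exactly the kind of rivalry that the real Workspace has to settle.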

Similarly, pairs of objects in different frameworks — letter-strings in Copycat — can be matched to give rise to bridges, or correspondences in the Workspace. A bridge establishes that two objects are counterparts: the objects either are the same, or serve an analogous role in their respective contexts. For example, aab and jkk both have a sameness group of length two, and so a bridge can be reified to explicitly capture this correspondence. The fact that one sameness group is at the beginning and the other at the end is not a deal-breaker: conceptual slippage makes the mapping possible.

Identity mappings can always form a bridge, but conceptual slippage must overcome some resistance based on the complexity of the proposed bridge. Highly overlapped shallow concepts slip easily, while bridging deeper and more removed concepts is more difficult. Tenuous analogies are less likely to hold. This is captured in the strength of the bridge once constructed.

The purpose of all this bridging is of course the controlled, coherent mapping between frameworks, that is, the input mapping and the query in the Copycat case.

As the construction effort in the Workspace advances and complexity rises, there is increasing pressure on new structures to be consistent with those already built. This is something like a global vision of the build effort, or a viewpoint. The Workspace is not just a hodgepodge of structures, but something more coherent. There's an organisation to action, just like in a colony of ants or termites. The viewpoint is biased towards certain structures over others, as a function of both the concepts at play and the state of the Workspace. Viewpoints themselves can evolve, and it is specifically this radical change that gives rise to the most interesting global behaviour.

While the Workspace is busy establishing a viewpoint and going about construction, the Slipnet is responding to it and changing shape. As concept activations come and go with Workspace structures, deeper concepts may end up being invoked, which has an effect on the Workspace, and so on. Deep discoveries in the Slipnet have lasting impact, shallow discoveries are more transient in nature. Remarkably, the manifest goal-orientation of programs like Copycat emerges from this somewhat mechanical concurrent pursuit of abstract qualities.
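One simple way to picture the difference between lasting and transient discoveries is a decay rule in which deeper concepts lose their activation more slowly. The sketch below assumes activation and depth both live on a 0..1 scale and that a concept loses a `(1 - depth)` fraction of its activation per step; these scales and names are illustrative assumptions, not the program's actual parameters.

```python
def decay_step(activation: float, depth: float) -> float:
    """One decay step: lose a (1 - depth) fraction of the current activation."""
    return activation - activation * (1.0 - depth)


def activation_after(steps: int, depth: float, start: float = 1.0) -> float:
    """Activation left after a number of steps with no fresh evidence."""
    activation = start
    for _ in range(steps):
        activation = decay_step(activation, depth)
    return activation


shallow = activation_after(5, depth=0.3)  # fades almost completely
deep = activation_after(5, depth=0.9)     # still clearly active
```

After five quiet steps the shallow concept has all but vanished, while the deep one retains more than half of its activation.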

"Metaphorically, one could say that deep concepts and structural coherency act like strong magnets pulling the entire system."

Coderack

The Coderack is a "waiting room", where Workspace operators — codelets — wait for their turn to run. Conceptually, it's close to an "agenda", a queue of tasks to be executed in a specific order, but crucially, in Hofstadterian architecture codelets are drawn from the Coderack stochastically instead of being organised in a deterministic queue.

Everything that happens in the Workspace is carried out by codelets, these simple software agents. Every labelling and scanning and bonding and bridge-building and disassembling is one small step in the overall execution. As with ant colonies and termites, whether a given codelet runs here or there or even at all is not really important. The magic happens when you take a step back and study the collective effect of many codelet operations together over time.

There are two kinds of codelets: scouts and effectors. Scouts look for potential actions in the Workspace, evaluate the state of a particular structure or all the frameworks as a whole, and then create or schedule more codelets to be run. Effectors actually do the work, either creating or taking apart structures in the Workspace. Through sequences of codelet runs, the overall program can "follow through" and indirectly carry out more elaborate operations.

For the Copycat example, a typical effector codelet could attach a description 'middle' to the b in abc, or bridge the aa in baa to the kk in kkl, and so on. Afterwards, a scout codelet could stop by, take notice of a label or a bridge and schedule more codelets to consider the other letters in the string, plus another scout to see what could be done next.

When codelets are created or scheduled, they get placed in the Coderack to wait with a certain urgency value. The Coderack is a priority queue of sorts, but more like a stochastically organised pool. The urgency value determines the probability with which the codelet is selected from the pool and is a function of the state of the Slipnet and the Workspace. For example, if a Slipnet concept is only lightly activated, the scout codelets related to it probably get scheduled with less urgency. Put another way, urgency is less a strict priority and more a speed of execution.
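A stochastic pool of this kind is easy to sketch. The class below is a minimal illustration, not the original implementation: the method names `post` and `choose` are my own, and codelets are represented by whatever object you care to put in.

```python
import random


class Coderack:
    """A pool of waiting codelets, drawn at random in proportion to urgency."""

    def __init__(self, rng=None):
        self.rng = rng if rng is not None else random.Random()
        self.pool = []  # list of (urgency, codelet) pairs

    def post(self, codelet, urgency: float):
        if urgency <= 0:
            raise ValueError("urgency must be positive")
        self.pool.append((urgency, codelet))

    def choose(self):
        """Remove and return one codelet; higher urgency means better odds."""
        if not self.pool:
            raise IndexError("Coderack is empty")
        weights = [urgency for urgency, _ in self.pool]
        index = self.rng.choices(range(len(self.pool)), weights=weights, k=1)[0]
        return self.pool.pop(index)[1]
```

Unlike a priority queue, the most urgent codelet is not guaranteed to run next. A codelet with urgency 9 waiting alongside one with urgency 1 gets picked first about nine times out of ten, which is the sense in which urgency behaves like a speed.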

Codelets can be divided into bottom-up and top-down agents. Bottom-up codelets, "noticers", are more opportunistic and open-minded, while top-down codelets, "seekers", are looking for specific things, such as particular relations or groups. Codelets can be viewed as proxies of the pressures currently in the system. Bottom-up codelets represent pressures that are always there, while top-down codelets are sensitive to the pressures of the particular situation at hand. Indeed, top-down codelets are spawned "from above" by sufficiently activated nodes in the Slipnet. The idea leads to action, to further processing.

Codelet urgency is indirectly a function of pressures, both from the Workspace direction as well as from the concepts in the Slipnet. In a sense the Workspace tries to maintain a coherent construction process, while the Slipnet pushes the system to explore new things, to realise trending concepts. However, neither kind of pressure is explicit anywhere in the architecture, but rather diffuse in urgencies and activations and link-lengths and more.

The pressures are the emergent consequences of all the events that take place in the Slipnet, the Workspace, and the Coderack. Individual codelets never make huge contributions to the overall process, but their collective effect develops into the pressures.

Initially, at the start of a run, a standard set of codelets with preset urgencies is scheduled in the Coderack. At each time step, a codelet is chosen to run and is removed from the current population of the Coderack. From there, randomness takes over and each run evolves in its own way based on which codelets end up getting attention.

The Coderack is kept populated through a replenishment process with three feeds: 1) bottom-up codelets are continually being added back as they are removed, 2) codelets themselves can add follow-up codelets, and 3) the Slipnet can generate more top-down codelets. Codelet urgency is assigned at creation time as an estimate of the "promise" that the codelet is showing at the time. Bottom-up codelet urgency is context-independent, follow-up codelets are urgent relative to progress made, top-down codelets are urgent relative to concept activation, and so on.
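The run loop and its three feeds can be sketched in a few lines. Everything here is a toy under stated assumptions: codelets are plain `(urgency, kind, action)` tuples, an action returns its follow-up codelets, and `slipnet_feed` is a hypothetical hook standing in for the Slipnet.

```python
import random


def run_coderack(initial, steps, rng, slipnet_feed=None):
    """Toy Coderack loop. Codelets are (urgency, kind, action) tuples, and an
    action is a callable returning a list of follow-up codelets."""
    pool = list(initial)
    trace = []
    for _ in range(steps):
        if not pool:
            break
        # Stochastic choice: probability proportional to urgency.
        weights = [urgency for urgency, _, _ in pool]
        index = rng.choices(range(len(pool)), weights=weights, k=1)[0]
        urgency, kind, action = pool.pop(index)
        trace.append(kind)
        follow_ups = action()
        # Feed 1: bottom-up codelets are added back as they are removed.
        if kind == "bottom-up":
            pool.append((urgency, kind, action))
        # Feed 2: the codelet that just ran may post follow-ups.
        pool.extend(follow_ups)
        # Feed 3: the Slipnet may post top-down codelets (hypothetical hook).
        if slipnet_feed is not None:
            pool.extend(slipnet_feed())
    return trace, pool


def notice_something():
    """A bottom-up codelet that always posts one more urgent follow-up."""
    return [(3.0, "follow-up", lambda: [])]


trace, pool = run_coderack([(1.0, "bottom-up", notice_something)],
                           steps=20, rng=random.Random(0))
```

The `trace` records which kind of codelet ran at each step. Because the bottom-up codelet is always put back, the pool never runs dry, and the follow-ups it posts soon take up most of the running time.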

The overall effect is that the Coderack, together with the Slipnet and the Workspace, forms a feedback loop between perceptual activity and conceptual activity. Workspace observations activate concepts in the Slipnet, and concepts bias the directions that perceptual processes explore. There is no top-level executive directing the overall effort.

The analogy is to biological systems, particularly the cell with its cytoplasm and enzymes and DNA genomes with varying expressions, dormant genes, and more. In the cell, the overall effect amounts to a coherent metabolism. The idea in these software systems, this elaborate evolutionary heuristic search, is to establish order in a similar way.

Parallel Terraced Scan

"Parallel terraced scan" is the term the Fargonauts use to describe the overall heuristic search carried out by the system. Parallelism here refers to concurrency, the idea that there are many "fingers" (or "heads") carrying out the search simultaneously. These fingers feel out potential directions in which the search could proceed, guided by the aggregate pressures in the state of the program at any given point.

Crucially, the fingers are not actual objects or events in the system, but rather the manifestation of different scout codelets getting selected for execution. Pressures lead to a codelet distribution that selects the scouts at different rates, resulting in a search where each finger advances at its own pace. The state of the Workspace is then a result of this "commingling".

There are many potential directions in which the search can proceed: some get selected for exploration, while others may never gain sufficient support. The most attractive directions are probabilistically selected as the actual directions. Hofstadter offers that this is in agreement with how the human mind is believed to work: unconscious processes in the mind give rise to a unitary conscious experience.

The other analogy Hofstadter offers is to a colony of ants. There are "feelers" on the fringes of the colony doing the scouting, but then immediately behind the vanguard there's a terraced structure, later exploration stages. Each search stage probes a little deeper than the previous one. The first stages are cheap in computational terms, and all kinds of options can be explored. Later stages cost more and more, and the system has to be increasingly selective. Only when a promising direction has been deeply explored and found fruitful do the effector codelets gain currency.
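Stripped of codelets, the terraced part of the search can be sketched as a budgeted, staged exploration. This is a loose illustration rather than the FARG mechanism itself: the `probe` callback, the neutral starting estimate of 0.5, the cost of one unit per stage level and the fixed budget are all assumptions of mine.

```python
import random


def terraced_scan(candidates, probe, stages=3, budget=10, rng=None):
    """Explore candidates stage by stage, favouring those that look promising.

    `probe(candidate, stage)` is a hypothetical callback returning an estimate
    of promise in 0..1. Returns ({candidate: (stage, estimate)}, cost spent).
    """
    rng = rng if rng is not None else random.Random()
    progress = {c: (0, 0.5) for c in candidates}  # nothing known yet
    spent = 0
    while True:
        open_paths = [c for c in candidates if progress[c][0] < stages]
        if not open_paths:
            break
        # Promising paths advance more often, but none is ever ruled out.
        weights = [progress[c][1] + 0.01 for c in open_paths]
        chosen = rng.choices(open_paths, weights=weights, k=1)[0]
        next_stage = progress[chosen][0] + 1
        cost = next_stage  # assumption: deeper stages cost more
        if spent + cost > budget:
            break
        spent += cost
        progress[chosen] = (next_stage, probe(chosen, next_stage))
    return progress, spent
```

Each candidate plays the role of a finger: all of them get a cheap first look, but only those whose estimates hold up tend to reach the expensive later stages before the budget runs out.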

Pressures and Biases

At the heart of Hofstadterian architecture is the idea that conceptual fluidity is an emergent phenomenon that arises from a "commingling of pressures", the complex interaction of competing and cooperating computational elements. These components all operate simultaneously, directing the overall search process together. The codelets, each with its own small probability of getting run, serve as the agents or transmitters of these guiding pressures, pushing and pulling the search in different directions for every execution.

Hofstadter emphasises that the pressures are not to be taken as pre-laid-out processes, but rather in probabilistic terms. Conceptual pressures take the shape of evolving concepts or clusters of concepts trying to make themselves part of the situation. Workspace pressures are like competing viewpoints trying to establish themselves. Running codelets can advance (or hinder) numerous pressures. It might appear to us like there's a predetermined high-level process at play, a grand plan to it all, but this is merely an illusion. We are simply picking up on patterns as they emerge from lower level interactions.

This means that nobody is in charge. No unique process can be said to have driven the search to whatever goal it ended up in. If we choose to step through the parallel execution, we might be able to impose some order and see where critical changes happen, but this kind of dissection leaves plenty of room for interpretation. Hofstadter and Mitchell point out that untangling the effect of various pressures would be like studying a basketball game at the level of minute individual movements by all the players on court simultaneously.

The overall search process has a natural arc. In the beginning there are only bottom-up codelets available on the Coderack, the initial codelet supply. As the system runs and small discoveries are made, the Workspace slowly fills up with structure, and at the same time certain concepts gain activation in the Slipnet. Over time, more and more situation-specific pressures get produced and so top-down codelets eventually come to dominate the scene.

The Slipnet starts off as "neutral", without situation-specific pressures. Over the course of a run, the Slipnet picks up a bias towards certain concepts and concept constellations — themes. These themes then guide the urgencies of different codelets, steering, for their part, the overall proceedings. An "open-minded" implementation is willing to entertain any theme at the outset, becoming more "closed-minded", or more strongly biased towards the end. The top-down pressures control the biases, which influence exploration direction. They can only do so much, though, and must yield to the actual situation.

From another point of view, the transition that happens over the course of a run is from local changes to global effects. The scale of actions grows with new discoveries, as operations get run on ever larger, more complex and coherent structures in the Workspace. Concepts evolve, growing from shallow to deep as themes emerge. As the composition of components changes, so changes the style of processing, from early non-deterministic volatility to more deterministic, orderly behaviour towards the latter stages.

Temperature

In addition to the gradual evolution of high-level perception, the Fargonauts wanted to capture the essence of paradigm shifts (the "Aha!" moments) in their software. In the Copycat microdomain this would amount to unexpected or subtle answers. The authors briefly argue that these can only emerge in cases where there's a snag, a conceptual block that prevents a situation from evolving in the direction of its trend. In these cases, the system must sharply focus attention on the problematic encounter, and be unusually open-minded.

In Hofstadterian architecture temperature regulates the system's open-mindedness. In the beginning the search has nothing to work with, no information that could be used to drive exploration, and so internal organisation can be left loose. The more information is picked up, the more important it is that top-level decision-making becomes coherent and less adventurous. Things start off at a high temperature, there's a lot of movement, but as Slipnet concepts and Workspace structures mature, things soon cool off. What starts off as a Wild West evolves over time into more of a conservative settlement.

Temperature is controlled by the degree of perceived order in the Workspace. Hofstadter and Mitchell present temperature as an inverse measure of structural quality: the more numerous and strong the structures in the Workspace are, the lower the temperature. In a typical run, temperature goes both up and down, reflecting the inherent uncertainty of the search process.

Concretely, temperature controls the degree of randomness used in decision-making. At low temperatures differences in object attractiveness remain sharp, while at higher temperatures less attractive objects get picked up roughly as often as their more attractive peers.
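Both halves of this mechanism, the feedback from structure to temperature and the effect of temperature on choice, can be sketched briefly. The 0..100 scale, the `capacity` parameter and the particular exponent are illustrative assumptions; what matters is that the exponent grows as temperature falls.

```python
def temperature(strengths, capacity):
    """Inverse measure of structural quality: 0 (ordered) to 100 (chaotic).

    `strengths` are the strengths of the structures built so far, and
    `capacity` is a hypothetical ceiling for their sum.
    """
    quality = min(1.0, sum(strengths) / capacity) if capacity > 0 else 0.0
    return 100.0 * (1.0 - quality)


def adjusted_weights(weights, temp):
    """Sharpen differences at low temperature, flatten them at high temperature."""
    exponent = (100.0 - temp) / 30.0 + 0.5
    return [w ** exponent for w in weights]


hot = adjusted_weights([0.9, 0.3], temp=100.0)  # ratio shrinks towards 1
cold = adjusted_weights([0.9, 0.3], temp=0.0)   # ratio grows sharply
```

Feeding the adjusted weights into a weighted random choice gives near-uniform picks when the Workspace is a mess and near-greedy picks once strong structures are in place.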

In simulated annealing, temperature is only used as a predetermined top-down randomness control, but in Hofstadterian architecture, temperature serves a vital feedback role. Temperature in Copycat is a quality control mechanism, where the system monitors itself and adjusts its willingness to take risks.

Notably, temperature is a true measure of system state. The Fargonauts discovered that the final temperature of the system is a proxy for the quality of the answer the system was able to come up with. The logic goes that strong, coherent structures lead to both plausible answers and low temperatures.

Higher Perception

Shades of grey

As previously discussed, Melanie Mitchell's Copycat is an analogy-making program operating in the letter-string domain. Like many other FARG projects, Copycat was designed and purpose-built for the study of conceptual fluidity, the ability to liken certain situations to other situations.

In Hofstadterian architecture scalable fluidity, of the kind that could power cognition, is founded on the idea that concepts need not be discrete and binary. There's no need to chop down situations so that they fit neatly in sharp-edged formal data structures. Concepts don't have to be sharply and irreversibly cut from one another. A certain degree of blurring, or "shades of grey", is possible. Indeed, cognition requires that concepts can be invoked partially.

Partial invocation of concepts drawn from a large pool requires an organising principle, which in human cognitive terms could be described as 'attention', or even 'the mind's eye', as Hofstadter offers. We direct our discerning gaze at what we feel is the core of a given situation, bringing along some things and leaving others behind. This applies to both ideal concepts as well as concrete examples, which blend together in our perception of the real world.

In Copycat, partial invocation of things is due to the probabilistic parameterisation of the entities at play. Everything could in principle have an active role, but only some things get randomly chosen to participate. There are probability distributions everywhere: Slipnet concepts have their activation, Workspace constructs have their salience, and Coderack codelets their urgency. There are dynamic, variable dimensions of operation at every level of the architecture. At any given moment, the overall search effort is focused in certain areas at the expense of others.

It is these shades of grey that ultimately give rise to fluid concepts, the emergent patterns of cognition.

Beyond Copycat

In his afterword to Mitchell's expanded Copycat treatise Analogy-Making as Perception: A Computer Model (1993), Hofstadter wrote about the next steps to take with fluid concepts under the title "Prolegomena to Any Future Metacat". The short piece is included in FCCA as Chapter 7 and stresses the importance of concepts in the study of cognition, and particularly the way in which concepts "stretch and bend and adapt themselves to unanticipated situations".

Unlike many researchers of the era, Hofstadter believed that perception and cognition are inseparable. In fact Copycat's limited cognition is all perception. The execution of the search is founded on perception and constant re-perception of the overall situation. In Hofstadter's view this is the crux of creativity. Copycat, the Hofstadterian architecture, is therefore really a model of creativity.

Looking beyond Copycat, Hofstadter realised that the way the system handles cognitive paradigm shifts is "too unconscious". Copycat is aware of the problem, but less so of the process it was working through, and the ideas it was working with. Copycat kept hitting snags, or dead-ends, repeatedly, in a way that humans do not. For a task like the Copycat game, people are generally aware of what they are doing. Hofstadter wanted to see more self-awareness in his programs, to get them somehow on the "consciousness continuum", to demonstrate rudimentary consciousness.

Hofstadter speculates that brains' consciousness is due to their special organisation, in two ways in particular. First of all, brains possess concepts, allowing complex representations to be built dynamically. Secondly, brains are able to self-monitor, which allows for an internal self-model to arise, enabling self-control and greater open-endedness. Copycat can do some kind of a job with the former, but has little to offer in terms of the latter.

Humans notice repetition in action, and get bored, while more primitive animals do not. Humans can break loops, jumping out of a system and its context. This requires not just an object-level awareness of tasks, but a meta-level awareness of one's own actions. Clearly, in humans, meta-level awareness is not a neural action, but something that occurs much higher up in the cognitive sphere.

Hofstadter gives a description of the things that a creative mind is capable of:

- A keen sense of what is interesting: A creative mind has biases, prejudices, or discriminating taste that allow the mind to narrow down its interest in a domain.

- Ability for recursion: A creative mind keeps on "following its nose", making intuitive choices to go deeper or not.

- Meta-level awareness: Watching and monitoring one's path through "idea space", being sensitive to patterns in both what is being produced as well as in one's own mental processes in the act. Sensitivity to form as well as content.

- Ability to adjust accordingly: Modifying one's sense of what is interesting and good according to experience. Creative adaptability.

Essentially, a creative system must go through its own experience, and store the relevant pieces of information for future use. In Chapter 7 of FCCA, Hofstadter goes on to outline five challenges for a Metacat project that would build on the Copycat success, but I won't repeat those here.

Hofstadter's ideas about Metacat were later explored by James B. Marshall in his PhD dissertation (1999). Marshall maintains a detailed Metacat website, including a VM for running Metacat.

"Metacat focuses on the issue of self-watching: the ability of a system to perceive and respond to patterns that arise not only in its immediate perceptions of the world, but also in its own processing of those perceptions. [..] Metacat's self-watching mechanisms enable it to create much richer representations of analogies, allowing it to compare and contrast answers in an insightful way."

FARG Systems

The FCCA book, Fluid Concepts & Creative Analogies (Hofstadter & FARG, 1995), presents the origin story of Hofstadterian architecture. It is by far the best resource on FARG systems and this research programme. The second best resource is probably the individual theses that the authors of these systems wrote about their work.

More recently, Alex Linhares has taken on the great challenge of collecting and organising FARG systems in a single document repository, FARGonautica. The repository has code samples and even full sources — shared as is — for many of these systems.

A brief chronology of (some) FARG systems, with their authors and domains:

- Jumbo by Douglas Hofstadter (1983): Unscramble a jumbled word, i.e., solve an anagram.

- Numbo by Daniel Defays (1988): Assemble a target number from given integers using arithmetic operations. Very much like the numbers round in the British TV show Countdown. The game is known as "Le compte est bon" in France.

- Seek-Whence by Marsha Meredith (1986): Determine the next value or pattern in a number sequence.

- Copycat by Melanie Mitchell (1990): Analogies between pairs of letter strings.

- Tabletop by Robert French (1992): Analogy-making with everyday objects on a virtual dining table, viewed from above.

- Letter Spirit by Gary McGraw (1995) and John Rehling (2000): Creative typeface design.

- Metacat by James Marshall (1999): Copycat, but with introspection.

- Phaeaco by Harry Foundalis (2006): Bongard problems.

- SeqSee by Abhijit A. Mahabal (2009): Integer sequence extrapolation, Copycat meets Seek-Whence.

- Musicat by Eric Nichols (2012): Simulation of the process of listening to a simple melody.

References

Hofstadter, Douglas R. and the Fluid Analogies Research Group. (1995). Fluid Concepts and Creative Analogies: Computer Models of the Fundamental Mechanisms of Thought. Basic Books, Inc. Wiki GoodReads

Hofstadter, Douglas R. (2001-2009). Analogy as the Core of Cognition. Stanford Presidential Lectures series. Web PDF Video (2006) — Essential Hofstadter, for the Stanford Presidential Lectures series.

Hofstadter, Douglas R. (2001) Analogy as the Core of Cognition. In The Analogical Mind: Perspectives from Cognitive Science, Dedre Gentner, Keith J. Holyoak, and Boicho N. Kokinov (eds.). pp. 499-538. MIT Press.

Marshall, James B. (1999). Metacat: A Self-Watching Cognitive Architecture for Analogy-Making and High-Level Perception. Doctoral dissertation, Indiana University, Bloomington. Web PDF

Meredith, Marsha J. (1986). Seek-Whence: A Model of Pattern Perception. Doctoral dissertation, Indiana University, Bloomington. PDF

Mitchell, Melanie. (1990). Copycat: A Computer Model of High-Level Perception and Conceptual Slippage in Analogy-Making. Doctoral dissertation, Indiana University, Bloomington. Web

Mitchell, Melanie. (1993). Analogy-Making as Perception. MIT Press. GoodReads MIT Press

Mitchell, Melanie. (2015). Visual Situation Recognition. Video — A talk by Prof Mitchell at Clojure/West, on her research, including a demo of Copycat.

Mitchell, Melanie and Lex Fridman. (2019). Concepts, Analogies, Common Sense & Future of AI. Video — Interview by Lex Fridman, on Prof Mitchell's research, with a great segment on Copycat and concepts and things.

Sörensen, Kenneth. (2013). Metaheuristics — The metaphor exposed. International Transactions in Operational Research, 22(1), 3–18. DOI

For more information on various FARG systems, definitely check out Alex Linhares' FARGonautica.